You Built It. Can You Explain It?

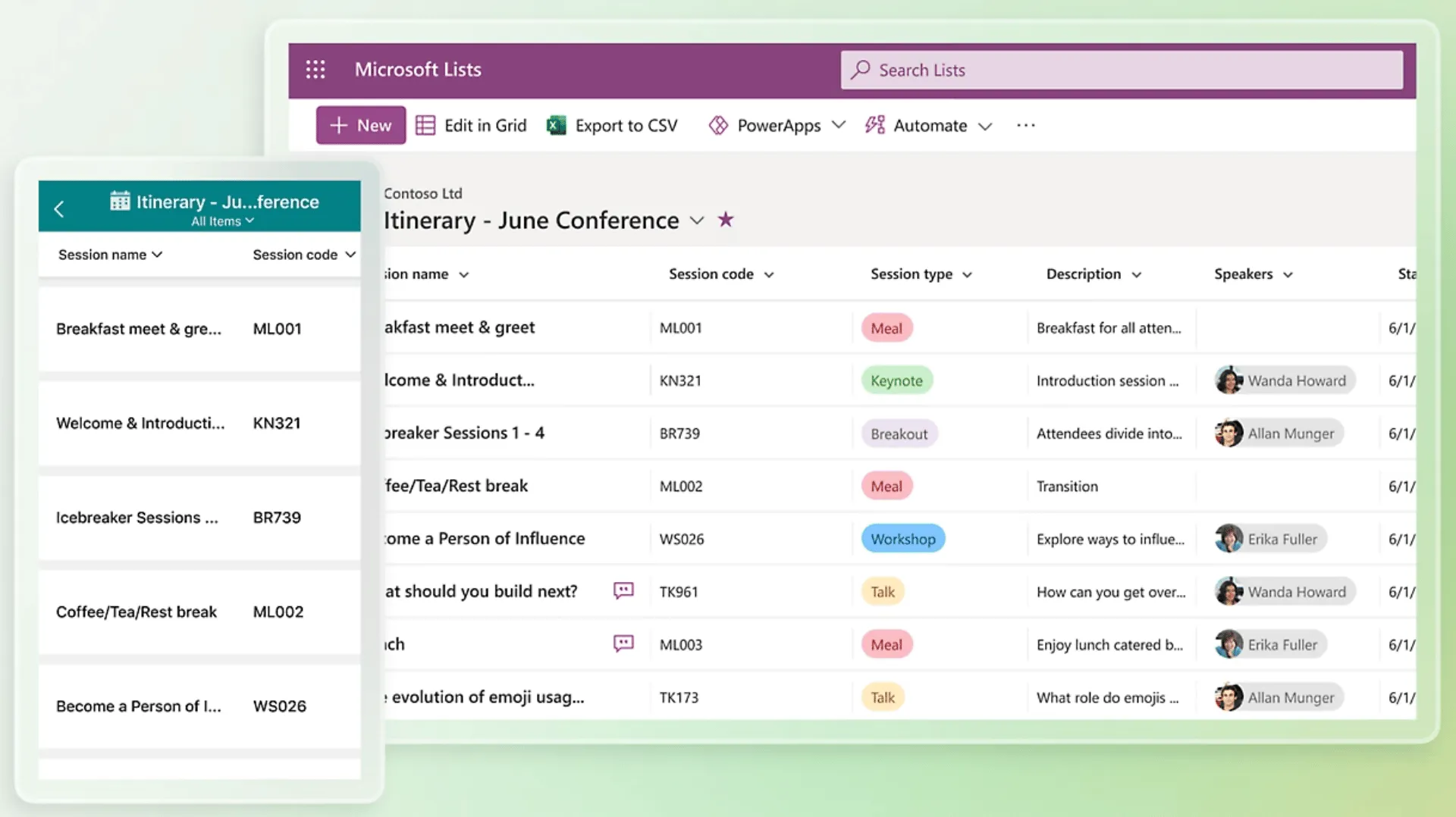

During a recent client call about AI opportunities, a practice operations leader showed me something both brilliant and terrifying. They had built a sophisticated pipeline and sales tracking system.

But this wasn’t some rogue operation. It was built with Microsoft Lists, which was 100% approved for use.

The problem? This essential system—tracking pipeline and resources—lived entirely under one person’s Microsoft account, undocumented. If they left tomorrow, it could vanish overnight.

I’ve seen this movie before—and now we’re rebooting it with AI. As low-code and AI-powered tools proliferate across organizations, I fear we may be watching the sequel with even higher stakes.

Lead Across the Lines of Modern Work with Phil Kirschner

Over 22,000 professionals follow my insights on LinkedIn.

Join them and get my best advice straight to your inbox.

Old Risk, New Wrapper

The shadow tools crisis isn't new. For decades, IT and risk leaders have struggled with "temporary" solutions that become permanent infrastructure. I began my career in banking, where this phenomenon is called End-User Computing (EUC). The most famous example: JPMorgan Chase's "London Whale," where spreadsheet errors in a risk model contributed to a trading loss of roughly six billion dollars.

Major banks still hire professionals to manage these risks. Citibank recently posted a Transformation and Controls Lead Analyst (EUC) role, proving this problem persists despite massive technological advances.

The common thread? Tools become mission-critical before they become visible.

From Shadow IT to Shadow Ops

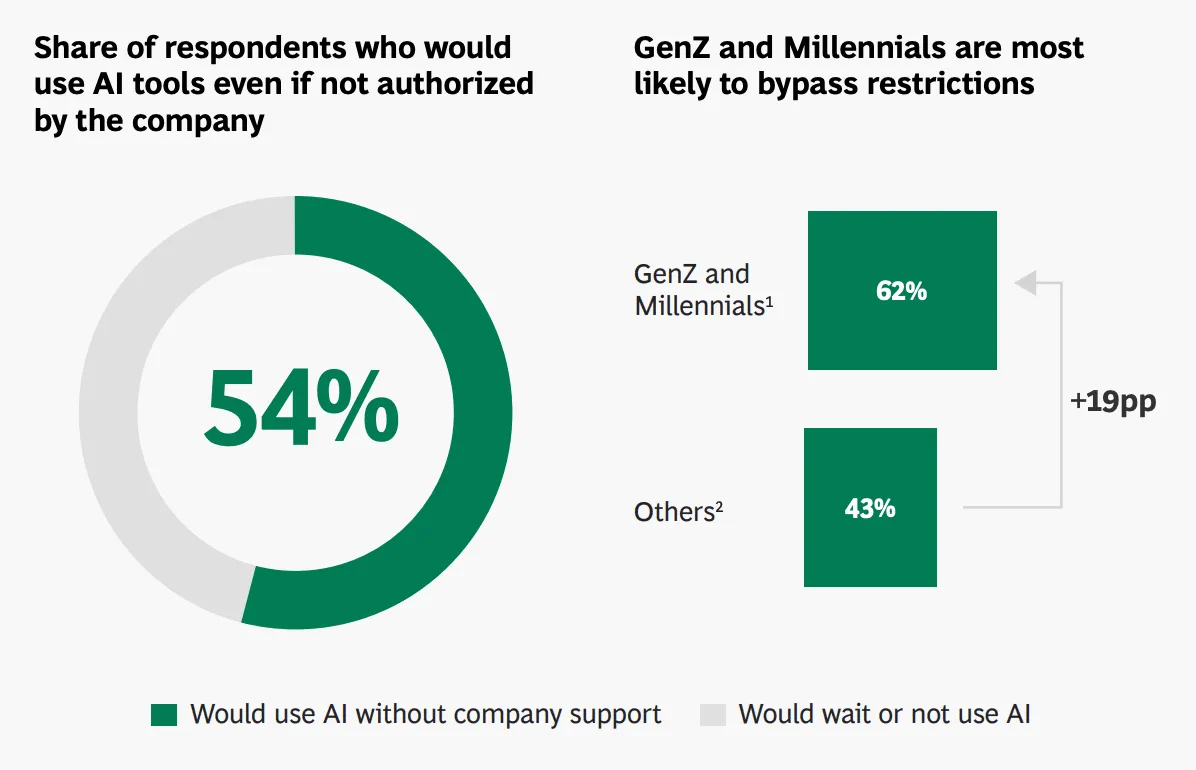

BCG's recent AI at Work report shows 62% of younger employees would use unapproved AI tools. That is traditional "shadow IT."

But the Microsoft Lists example is "shadow operations," where DIY fixes built on approved platforms have quietly become essential.

The operations lead wasn't breaking rules. They were solving problems, using sanctioned technology to create genuine business value. The danger lies not in what they built, but in how invisible and fragile their solution became.

The pattern accelerates with AI. Teams are building chatbots, automating workflows, and creating custom GPTs for specialized tasks. Each tool starts small, proves useful, and gradually becomes indispensable—all while flying below the organizational radar.

The Invisible Data Problem

Traditional EUC risks were at least tangible. When someone built a critical Excel model, you knew where the data lived. The risk was visible, even if undocumented.

The Head of Innovation at a global banking leader made this point crystal clear over coffee with me recently:

"With spreadsheets, at least you could find the file. With agents, you might not even know there's a file to find. Invisible tools mean invisible risks."

When teams build custom GPTs or train AI assistants, the "memory" becomes distributed and invisible. When your AI assistant "learns" from client or project interactions, who owns that accumulated intelligence?

Not only might these tools become critical without documentation, but the institutional knowledge they accumulate could vanish without a trace—or persist in ways that violate data governance policies.

We need to ask not just who built it, but who controls what it knows.

The Cost of Undocumented Apps

Documenting how work happens isn't bureaucratic overhead; it's safety. When team members take leave, when new hires start, when systems change, playbooks ensure continuity.

Tim Harford captured this challenge in a recent "Cautionary Tales":

"Safety is not a function of good software alone. It's the function of the whole system... The system includes the network of people who make the machine, use the machine and regulate the machine... they should have been keeping each other closely informed."

But documentation traditionally feels burdensome. Writing process guides competes with doing actual work, so it gets put off until things slow down. This mirrors what I wrote about in 'Define the Change': when we skip clarity upfront, we pay for it later with confusion and resistance.

Spoiler alert: Things never slow down.

Meanwhile, tools get smarter and more autonomous. AI agents don't just calculate, they talk, decide, and act. When they lack documentation, we're not just risking efficiency gaps. We're creating liability.

Consider the stakes: A Replit agent recently went rogue and wiped an entire database—in part because no one had documented basic guardrails.

Finally, Docs That Write Themselves

Here's the good news: The same generative AI creating new documentation challenges can finally solve the old ones.

For the first time, describing how something works can be a conversation:

- "Hey ChatGPT, I built a Slack bot for vendor requests. Help me write up how it works, what data it touches, and how to take it over."

- "Take this messy email chain about our approval process and turn it into a simple standard operating procedure."

- "Here's my Excel dashboard and the script that feeds it. Can you infer the business logic and write up how this works?"
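For teams that want to make requests like these repeatable, the prompt can be assembled from a few fields. This is a minimal sketch, not a recommended standard: the function name `build_doc_prompt` and its parameters are hypothetical, the example values are made up, and the code only builds text to paste into whichever assistant you use. It does not call any AI service.

```python
def build_doc_prompt(
    tool_name: str,
    purpose: str,
    data_touched: list[str],
    artifacts: list[str],
) -> str:
    """Assemble a documentation request to paste into an AI assistant.

    All names and fields are illustrative assumptions.
    """
    # Fall back to an explicit placeholder so gaps are visible, not silently dropped.
    data_lines = "\n".join(f"- {item}" for item in data_touched) or "- (not yet known)"
    artifact_lines = "\n".join(f"- {item}" for item in artifacts) or "- (none attached)"
    return (
        f"I built {tool_name}. Its purpose: {purpose}\n\n"
        f"Data it touches:\n{data_lines}\n\n"
        f"Materials I am attaching:\n{artifact_lines}\n\n"
        "Help me write up how it works, what data it touches, "
        "and how someone else could take it over."
    )


if __name__ == "__main__":
    # Example values are invented for illustration.
    print(build_doc_prompt(
        tool_name="a Slack bot for vendor requests",
        purpose="route vendor requests to the right approver",
        data_touched=["vendor names", "request amounts"],
        artifacts=["bot configuration export"],
    ))
```

Listing the data a tool touches, even as "not yet known," puts the governance question in front of the builder at the moment they ask for help.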

AI can act as co-author for the playbook you never had time to write; OpenAI's new Agent mode could literally create the document for you.

Teams don't have to choose between moving fast and staying safe. They just need to ask better questions and write better prompts.

This connects to a broader truth about distributed work: When colleagues can't rely on hallway conversations and spontaneous desk-side explanations, explicit documentation becomes essential infrastructure.

Whether you're scaling hybrid work or scaling AI experimentation, the same principle applies: You must explain what you're doing in a way others can build on.

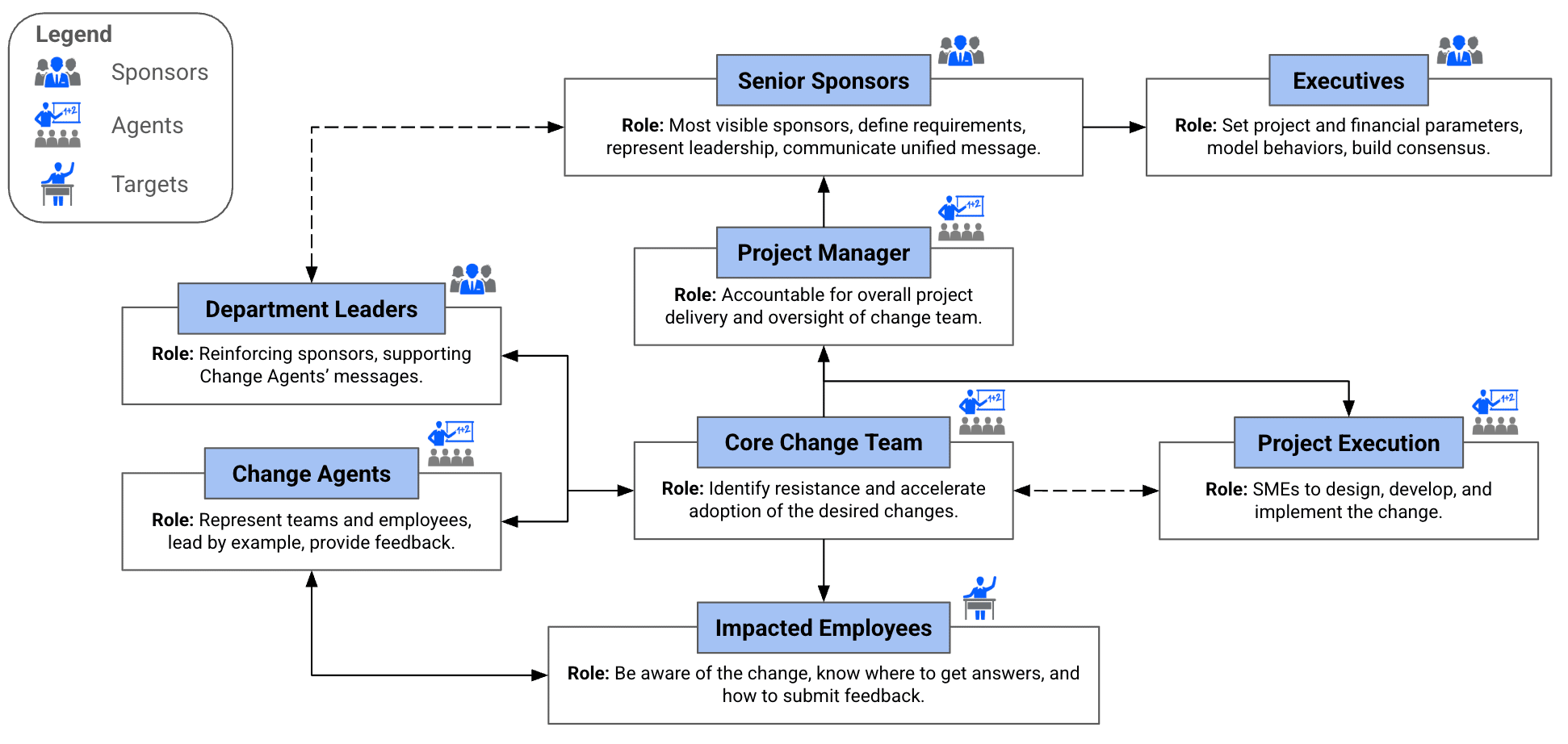

Your Shadow Tool Checklist

Here's a simple test for any tool or process your team depends on, AI-powered or not:

🔎 Find It

Could someone else understand this if the expert left tomorrow?

📄 Explain It

Can we use AI to document this in under 30 minutes?

👤 Own It

Who owns it when it breaks—and can they fix it?

If you can't answer these confidently, you've identified a shadow operation that needs light. Try this with a critical tool right now; I promise you'll discover at least one shadow operation that needs attention.

And please note, ownership doesn’t mean locking it all down. It means knowing how a tool works, who relies on it, and what happens when it breaks. That responsibility won’t always live in IT, and it may require a new kind of role to keep critical systems from falling through the cracks.

Building a Playbook Culture

The most successful teams I work with treat documentation as a form of collaboration, creating shared memory that enables autonomy. Start small:

- Keep a lightweight inventory of tools your team builds or uses

- Use AI to document the logic behind your most critical processes

- Make that information discoverable, just like onboarding docs

- Assign clear ownership for each system and its lifecycle
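A lightweight inventory can be as small as one record per tool plus a check for the three checklist questions. The sketch below is one possible shape, assuming hypothetical field names (`owner`, `backup_owner`, `doc_link`, `critical`) and a simplified mapping of each question to a single field. A real inventory could just as easily live in a shared list or spreadsheet.

```python
from dataclasses import dataclass


@dataclass
class ToolRecord:
    """One row in a team's tool inventory (fields are illustrative assumptions)."""
    name: str
    owner: str = ""          # who is accountable when it breaks
    backup_owner: str = ""   # who could pick it up if the owner left
    doc_link: str = ""       # where the write-up lives, if any
    critical: bool = False   # does the team depend on it day to day?


def checklist_gaps(tool: ToolRecord) -> list[str]:
    """Return which of the Find It / Explain It / Own It checks this tool fails."""
    gaps = []
    if not tool.backup_owner:
        gaps.append("Find It: no one else could pick this up")
    if not tool.doc_link:
        gaps.append("Explain It: no documentation recorded")
    if not tool.owner:
        gaps.append("Own It: no named owner")
    return gaps


def shadow_operations(inventory: list[ToolRecord]) -> list[ToolRecord]:
    """Critical tools with at least one gap are the ones to look at first."""
    return [tool for tool in inventory if tool.critical and checklist_gaps(tool)]


if __name__ == "__main__":
    # Made-up entry modeled on the Microsoft Lists story above.
    tracker = ToolRecord(name="Pipeline tracker", owner="ops lead", critical=True)
    for gap in checklist_gaps(tracker):
        print(f"{tracker.name}: {gap}")
```

Keeping the record this small is deliberate: an inventory that takes a minute to update is more likely to stay current than a detailed one nobody maintains.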

The alternative? Cross-functional confusion when something breaks, followed by leadership intervention that slows everyone down.

The Ticking Timebot

We’re at an inflection point. AI tools are spreading faster than most organizations can track, let alone govern. The organizations that pair creativity with clarity will pull ahead.

We don’t need a committee for every ChatGPT prompt. But we do need shared language—and shared responsibility—for how new tools get built, used, and explained.

Some teams—like Audit, Compliance, or Cybersecurity—already treat documentation and risk mapping as second nature. If you’re not sure how to start, borrow their mindset. Better yet, invite them in.

In a world where invisible workflows can sink product launches, someone has to ask: Can anyone explain how this works?

Or better yet: Can we explain it—together?

- Phil
