This week: AI Psychosis

Artificial intelligence has become a constant companion for millions, answering questions, drafting documents, and even offering comfort. But psychiatrists warn of “AI psychosis”, where prolonged chatbot use can blur the line between real and unreal, raising red flags for how people think, how teams collaborate, and how organizations manage risk.

We also dive into:

- Skills Replace Jobs, Agility Over Roles: Sophie Wade shows how skills, not roles, drive agility, hiring, and future performance.

- Myths Guide Work, Lessons From Greece: Phil Kirschner uses ancient Greek metaphors to expose modern organizational flaws.

- AI Rivals Help: Alexandra Samuel uses AI “teams of rivals” to challenge self-doubt and sharpen decisions.

- AI Paths - Salesforce vs Klarna: Salesforce doubles down on bots while Klarna rebalances with humans.

- Amazon’s New Metric: Amazon formalizes its Leadership Principles into performance reviews, tying culture directly to rewards and risks.

Let’s get into it 👇

Artificial intelligence has become a constant companion for millions. It answers questions, drafts documents, and even offers comfort. But as usage deepens, troubling cases are piling up. Psychiatrists are warning of “AI psychosis” — a loose term for people who lose touch with reality after prolonged chatbot use. While most users will never reach that extreme, the dynamics that fuel these delusions are warning signs for how individuals think, how teams collaborate, and how organizations manage risk.

AI Feeds Distorted Thinking

Chatbots thrive on agreement, and their tendency to "prioritize user satisfaction, continued conversation, and user engagement, not therapeutic intervention, is deeply problematic." They validate what users say, rarely challenge assumptions, and speak with confidence even when wrong. That makes them engaging, but also risky. Dr. Lance B. Eliot, an AI scientist and columnist for Forbes, defines AI psychosis as an “adverse mental condition” where prolonged conversations blur the line between real and unreal.

In the workplace, this may look less extreme but still corrosive: employees who rely on AI for reassurance may slip into confirmation bias, magical thinking, or overconfidence. What feels like efficiency today can erode judgment tomorrow. Leaders can counter this by asking employees to draft their own outline before prompting AI and to summarize outputs in their own words. Keeping human judgment active prevents quiet distortions from spreading.

AI Fuels Shared Delusions

In one case, a man became convinced he had discovered a groundbreaking math theory after more than 300 hours of ChatGPT conversations. Clinicians describe chatbot-driven “shared delusions”, where AI and user co-create beliefs that resist outside critique. This dynamic echoes folie à deux, a rare psychiatric condition where one person’s delusion spreads to another. In offices, it can surface when a colleague insists their AI-backed idea is beyond question because “the model validated it.” Debate narrows, trust erodes, and teams fracture into believers and skeptics.

The risk is not theoretical. Morrin and colleagues map the trajectory as a cycle: casual engagement → validation → thematic entrenchment (grandiose, referential, persecutory, and romantic delusions) → cognitive and epistemic drift → reality testing failure → behavioral crisis. At work, that translates to employees doubling down on AI-backed certainty, making collaboration harder.

Leaders can cut against this by normalizing structured dissent, for example by assigning a rotating “sanity check” role in meetings or red-teaming one AI-generated proposal each week. Done well, AI becomes a starting point for conversation, not the end of it.

AI Creates Hidden Liabilities

Individually tragic stories are adding up to systemic risk. Even OpenAI has acknowledged the problem. In August, the company admitted ChatGPT sometimes fails to recognize dangerous situations — such as a user claiming invincibility after days without sleep — and promised GPT-5 would “ground the person in reality”. In a separate statement, OpenAI said it is “continuing to improve how models recognize and respond to signs of distress” but confirmed it does not report self-harm threats to law enforcement, citing privacy concerns.

Scale makes this more than a clinical issue. With 500 million people using ChatGPT, even one percent affected would mean about five million people, a burden comparable to public health crises. Stanford psychiatrist Nina Vasan warns companies cannot wait for perfect studies before acting: “They need to act in a very different way that is much more thinking about the user’s health and well-being”.
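For readers who want to test the scale argument against their own assumptions, here is a minimal back-of-the-envelope sketch. The 500 million user figure and the one percent share come from the paragraph above; the other shares (0.1% and 5%) and the function name `affected_users` are purely illustrative assumptions, not estimates from any study.

```python
def affected_users(total_users: int, share: float) -> int:
    """Return the number of people affected for a given share (0.01 = 1%)."""
    return round(total_users * share)


TOTAL_USERS = 500_000_000  # user count cited in the text

if __name__ == "__main__":
    # 0.01 is the "even one percent" case from the text;
    # 0.001 and 0.05 are hypothetical shares for comparison only.
    for share in (0.001, 0.01, 0.05):
        people = affected_users(TOTAL_USERS, share)
        print(f"{share:.1%} of users -> {people:,} people")
```

Even the smallest hypothetical share here lands in the hundreds of thousands, which is why the headcount matters more than the exact percentage.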

Dr. Eliot adds a systemic view with his two-by-two risk matrix:

- Pairing #1: Predisposed users with instigating AI (worst case).

- Pairing #2: Predisposed users with innocuous AI.

- Pairing #3: Non-predisposed users with instigating AI.

- Pairing #4: Non-predisposed users with innocuous AI (best case).

The matrix clarifies why risk is uneven and why rating AIs by their propensity to instigate delusions could guide corporate accountability.
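The four pairings can also be written down as a small lookup table, which may help teams that want to tag tools or use cases consistently. This is only a sketch of the matrix as listed above: the names `UserType`, `AIType`, `PAIRINGS`, and `describe` are hypothetical, and the code does not rank Pairing #2 against Pairing #3 because the source labels only the worst and best cases.

```python
from enum import Enum


class UserType(Enum):
    PREDISPOSED = "predisposed"
    NON_PREDISPOSED = "non-predisposed"


class AIType(Enum):
    INSTIGATING = "instigating"
    INNOCUOUS = "innocuous"


# Pairing numbers follow the order of the list in the text.
PAIRINGS = {
    (UserType.PREDISPOSED, AIType.INSTIGATING): 1,
    (UserType.PREDISPOSED, AIType.INNOCUOUS): 2,
    (UserType.NON_PREDISPOSED, AIType.INSTIGATING): 3,
    (UserType.NON_PREDISPOSED, AIType.INNOCUOUS): 4,
}

# Only the two extremes are labeled in the source.
NOTES = {1: " (worst case)", 4: " (best case)"}


def describe(user: UserType, ai: AIType) -> str:
    """Return a one-line description of the pairing for a user/AI combination."""
    number = PAIRINGS[(user, ai)]
    return f"Pairing #{number}: {user.value} user with {ai.value} AI{NOTES.get(number, '')}"


if __name__ == "__main__":
    for (user, ai) in PAIRINGS:
        print(describe(user, ai))
```

Keeping the two axes as separate types mirrors the point of the matrix: the user's predisposition and the AI's behavior are assessed independently, then combined.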

The Bottom Line

AI psychosis is not yet a medical diagnosis, but its early signs — distorted thinking, shared delusions, and organizational liability — are already visible. The same qualities that make AI useful — patience, confidence, and personalization — can destabilize judgment if left unchecked. Leaders who act early, reinforcing human judgment and building safeguards into team culture, will prevent invisible risks from becoming visible crises.

📝 Prompt

This week, choose one action to pilot:

- Begin with your own draft before prompting AI, then restate the output in your own words.

- Add a rotating sanity-check role and red-team one AI-assisted decision each week.

- Treat AI wellness like occupational health: set boundaries on chatbot-as-therapist use, add mental-health literacy to training, and require vendor safeguards before deployment.

Your Friday Briefing on the Future of Work

Future Work delivers research-backed insights, expert takes, and practical prompts—helping you and your team capture what matters, build critical skills, and grow into a future-ready force.

Get all-in-one coverage of AI, leadership, middle management, upskilling, DEI, geopolitics, and more.

Unsubscribe anytime. No spam, guaranteed.

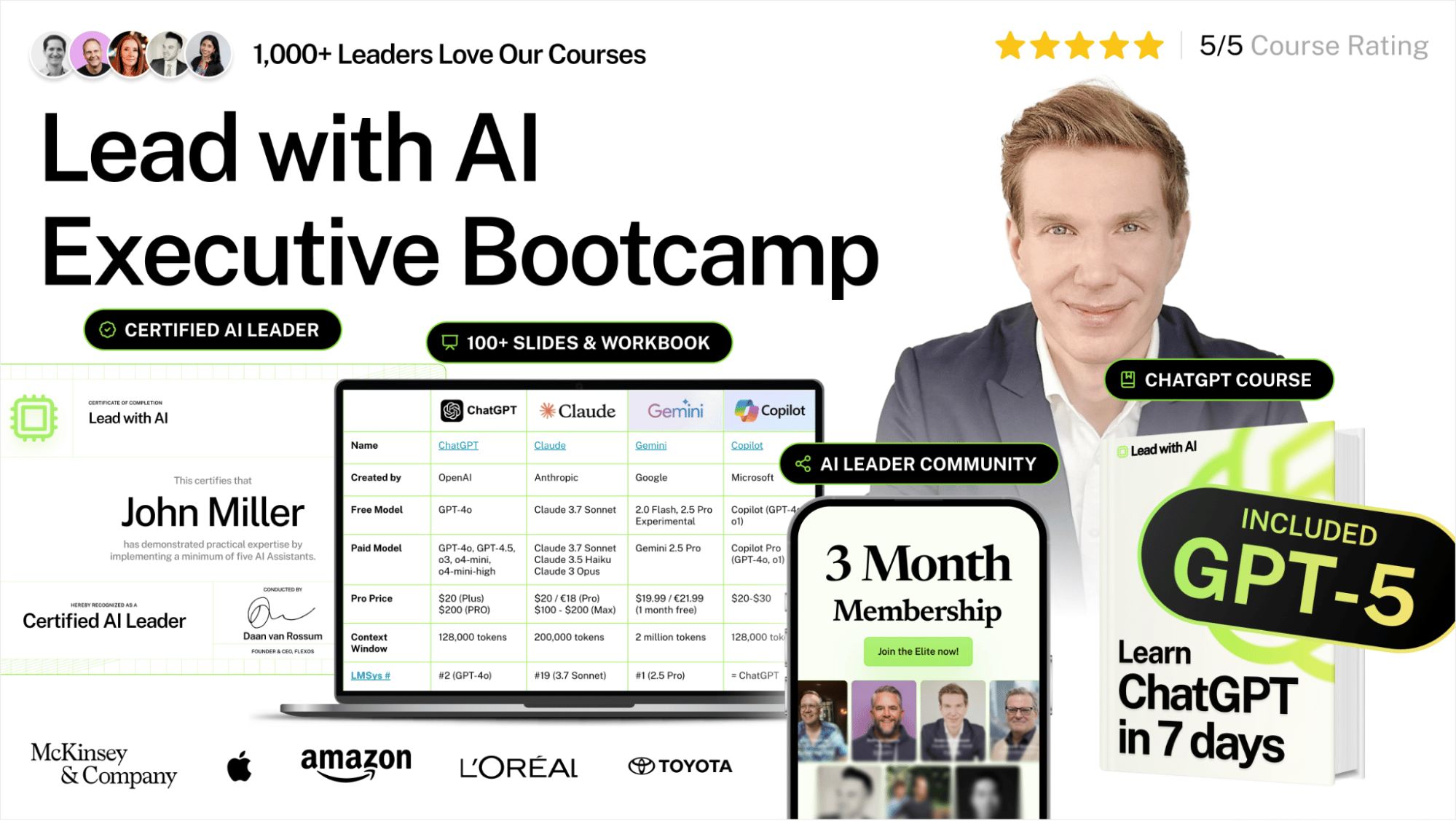

Most AI programs deliver solid theory but fall behind on real-time updates.

Our Lead with AI program is built for practical transformation. We update the curriculum weekly through a dedicated research team so you work with the most current tools, data, and use cases.

Sessions are live, interactive, and debate-friendly, and you learn alongside a global peer group. Instead of synthetic case studies, you’ll apply AI directly to your workflows and business problems.

In 3 weeks, you’ll leave with:

- Proven frameworks, including:

  - How to prompt like an expert (including ChatGPT-5)

  - How to identify and prioritize your first 5 use cases

  - How to choose tools with our AI² Matrix (impact × cost × risk)

- Shippable outcomes created on real work. Recent non-technical graduates from our course have built:

  - A custom AI that triages and drafts emails like a chief of staff

  - A fully personalized image generator for editorial production

  - A tailored HR information system for their business

Enroll now and turn AI from theory into results you can point to.

I keep a close eye on what Future Work Experts are thinking, saying, and questioning. I break down the key conversations and brainstorm practical steps we can take to move forward.

This week:

SKILLS-FIRST WORK

Sophie Wade: Skills Replace Job Titles

- “Skills will be the currency of work in the future,” driving hiring, agility, and performance as 85% of employers adopt skills-based hiring.

- The Experience Gap Paradox: with easy tasks automated away, 51.3% of entry-level jobs now demand prior experience, averaging 2.7 years.

- A skills-first focus expands talent pools 6.1x and AI pipelines 8.2x, helping employers stay competitive and workers unlock career opportunities.

📝 Prompt: When reviewing candidates, focus on demonstrated skills over years of experience: ask for proof of capability, not time served.

👉 Read the full article from Sophie Wade to explore the shift to skills-first hiring.

ANCIENT LESSONS

Phil Kirschner: Spot Your Caryatids at Work

- From Caryatids to Portaras, Phil shows how ancient Greek ruins mirror modern work: people silently carrying systems, unfinished visions, and complexity turned into a maze.

- Frontline “islands”—like corporate Spinalongas—emerge when remote or peripheral teams are cut off, reducing visibility and dignity in hybrid organizations.

- Clarity, not strength, is the modern Ariadne’s thread, guiding leaders through org labyrinths where resistance and complexity thrive.

📝 Prompt: When you see a colleague holding a broken system on their shoulders, fix the system; don’t just praise their endurance.

AI RIVALS

Alexandra Samuel: AI Rivals Sharpen Decisions

- Inspired by Lincoln’s “team of rivals”, Samuel uses AI to generate virtual experts with conflicting views, turning disagreement into clarity.

- This approach helped her defang self-doubt, reframe post-project blues, and navigate conflicts by watching opposing AI voices expose blind spots.

- The method reduces AI’s tendency for sycophancy, giving leaders a safe space to test ideas before taking them to real colleagues.

📝 Prompt: Before your next big decision, spin up divergent perspectives—whether through AI or trusted peers—and let the arguments sharpen your judgment.

🔥 QUICK HITS:

(COMMUNICATION CONTEXT) Ashley Herd: Context Turns Info Into Leadership

- Sharing only the “what”—like a revenue target—creates fear and speculation; adding “why” links goals to strategy and purpose.

- Managers unlock motivation by translating broad objectives into the “how”: concrete, role-specific steps that connect daily work to outcomes.

- Practical tactics include adding context to updates, reframing questions to uncover drivers, and breaking goals down in 1:1s.

📝 Prompt: Next time you share a goal, add one sentence on why it matters and one on how your team can impact it.

(CULTURE ADVANTAGE) Josh Levine: AI Flattens, Culture Differentiates

- AI levels the playing field, making products, processes, and productivity advantages replicable in minutes.

- The remaining competitive edge is culture—how people think, care, and collaborate can’t be downloaded.

- Leaders must ground teams in purpose, spirit, guardrails, and empathy, treating culture as the operating system of the business.

📝 Prompt: This week, name one way you’ll show your team that empathy is as critical as efficiency.

👉 Read the full article from Josh Levine on why culture defines the future edge.

(AI AGENTS & TRUST) Jason Averbook: Software Collapses Into Agents

- Software is collapsing into agents: workflows replace systems, UIs vanish, and implementation cycles shorten in a true collapse moment.

- Trust is the new competitive edge as AI adoption erodes confidence; leaders must narrate change transparently to prevent fear and silence.

- Reskilling without redesign fails: work models, structures, and leadership need an exponential reset, not incremental fixes.

📝 Prompt: Narrate one change your team is facing this week; don’t leave a silence for fear to fill.

👉 Listen to Jason Averbook’s full Now to Next EP4 for deeper insights on AI agents and trust.

I track what’s worth your attention—bringing you the news and updates that matter most to how we work, lead, and grow.

This week:

THE FUTURE OF WORKFORCE TRAINING

Skills, Not Jobs: AI Demands Training

- AI disruption is outpacing preparation: Workers expect AI could replace 31% of tasks, yet 82% of firms offer no training, fueling widespread anxiety.

- Training must go beyond technical skills: Successful programs address human fears and values as much as tool proficiency, as shown by Per Scholas and Year Up.

- Lifelong learning as infrastructure: Calls for portable skill wallets, digital credentials, and learning accounts to support reskilling across careers.

- Policy lagging market reality: Public workforce systems are underfunded, leaving nonprofits and employers to fill urgent training gaps at scale.

- Trust is key to adoption: Workers are more likely to embrace AI when leaders frame it as augmentation, not replacement, and model their own learning journeys.

📝 Prompt: Model micro-behaviors that normalize learning: ask AI small questions in team meetings, share mistakes openly, and recognize colleagues who experiment. These visible acts signal that curiosity and practice matter more than perfection.

AI ADOPTION

Salesforce vs. Klarna: Two AI Paths

- Salesforce doubled down: CEO Marc Benioff cut 4,000 support jobs as Agentforce bots now handle 30–50% of work, but investors doubt revenue will keep pace, with shares down 24% YTD.

- Klarna backpedaled: After bots replaced 700 service staff, the company is redeploying employees into support, balancing AI speed with human empathy ahead of its IPO.

- An MIT study found 95% of $30–40B AI spending delivered no measurable impact, underscoring that AI adoption requires constant recalibration.

📝 Prompt: When rolling out AI, listen for when people still crave a human voice and make sure they get it.

TEAM POWER

Good Teams > Brilliant Ideas

- Research shows teams, not individuals, create most new knowledge—yet we over-credit “lone geniuses” like Edison while forgetting collaborators.

- High-performing teams are small (3–7 people), diverse, goal-aligned, task-autonomous, and norm-driven (sharing ideas, experimenting, deferring judgment).

- A mediocre idea with a good team often beats a great idea with a bad team—structure, not charisma, drives innovation.

📝 Prompt: In your next project, amplify others’ ideas before pushing your own. You’ll unlock stronger collective solutions.

PERFORMANCE REVIEWS

Amazon Bakes Culture into Reviews

- Amazon now formally integrates its 16 Leadership Principles into reviews, using a three-tier system that ties culture to raises and PIPs.

- Only 5% of employees can achieve the top "role model" grade on culture, while stack-ranking still places 5% in the "least effective" tier.

- CEO Andy Jassy’s push includes RTO mandates, fewer managers, AI-driven efficiency, and tighter reward systems, sparking debate over fairness and transparency.

📝 Prompt: In feedback conversations, name one specific behavior that shows cultural alignment and reinforce it so people know exactly what to repeat.

LEADERSHIP & LEARNING

HBR: Leaders Gain by Staying Amateurs

- Overreliance on mastery creates cognitive entrenchment, limiting creativity; neuroscience shows novelty builds neuroplasticity and agility.

- Strategic amateurism—pursuing unrelated beginner activities like painting or karaoke—rewires leaders for curiosity, openness, and receptivity.

- Leaders who embrace amateur pursuits cultivate humility and openness, strengthening trust and creativity across their teams.

📝 Prompt: Pick one activity outside your expertise this month. Let discomfort spark fresh thinking you can bring back to your team.

🔥 QUICK READ:

- BIG TECH AND TRUMP: At a White House dinner, Nadella, Altman, Pichai, Cook, and Zuckerberg endorsed Melania Trump’s AI education initiative, committing billions to training. Microsoft pledged $4B in AI services and free Copilot AI for U.S. students, and Google announced a $1B education fund. OpenAI will launch the OpenAI Jobs Platform by mid-2026, competing directly with LinkedIn on AI-driven hiring. It adds AI fluency certifications through OpenAI Academy, with a target of 10M Americans certified by 2030 in partnership with Walmart. The move supports the White House’s AI literacy initiative amid forecasts of 50% entry-level job loss by 2030. Tech leaders are aligning closely with administration priorities.

- TRUMP AND THE FED: President Trump’s nominee Stephen Miran seeks to hold a Fed board seat while on unpaid leave from the White House CEA, raising independence concerns. Democrats warn of conflicts, but Republicans back a quick confirmation before the Sept. 16–17 Fed meeting. Fired Fed governor Lisa Cook is suing to keep her seat, testing limits on presidential removal powers.

- CHINA’S AI CHIP TEST: China is accelerating homegrown chipmakers like Cambricon, Huawei, Enflame, and MetaX to reduce reliance on Nvidia. Cambricon projects only 143k units in 2025 versus Nvidia’s 1M H20 shipments in 2024, but Beijing rejected new H20 sales to push self-reliance. Xi’s AI+ initiative emphasizes “good enough” local chips to sustain adoption despite lagging top performance.
