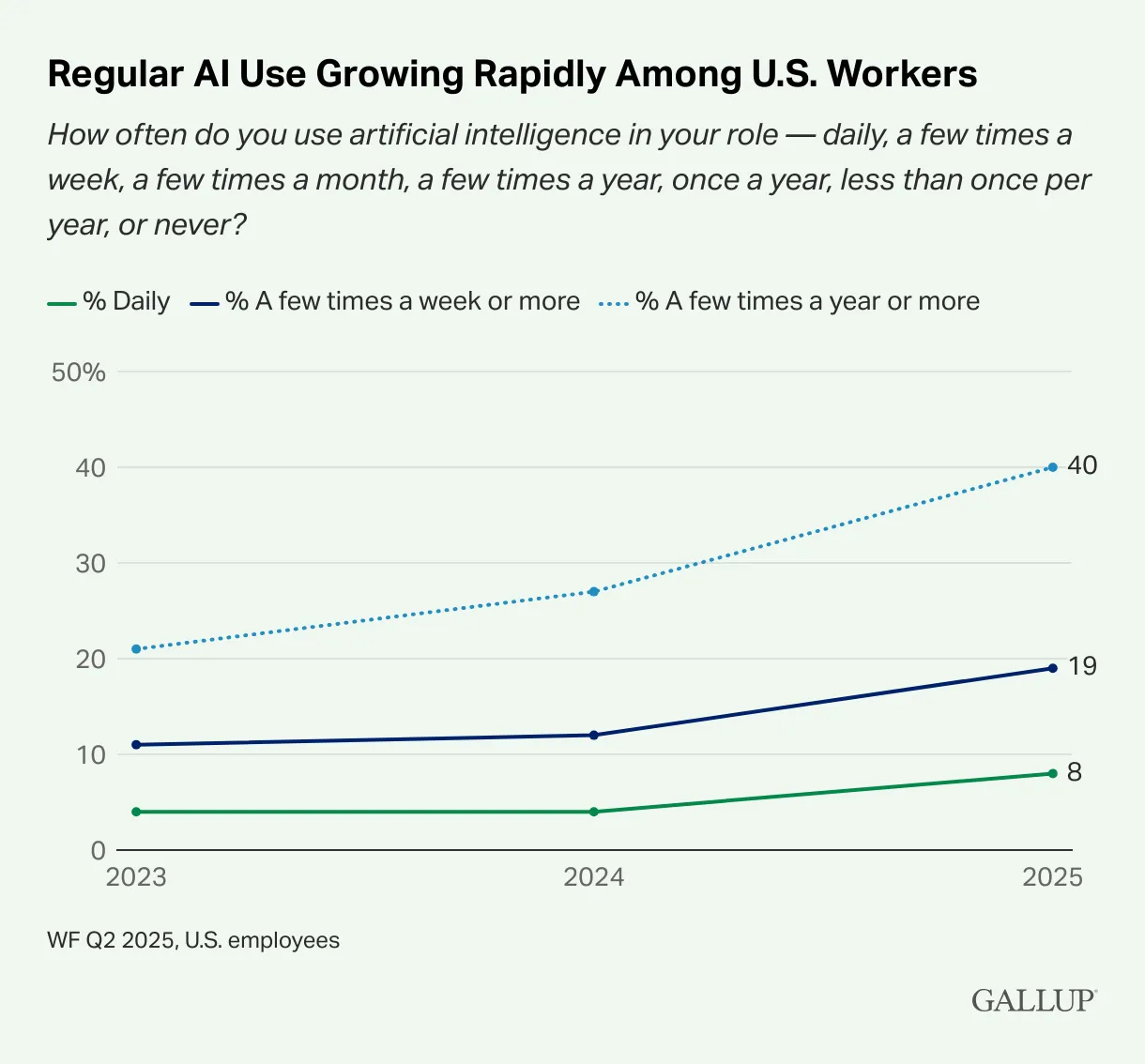

AI adoption is low. Lower than most of us “in the bubble” could imagine.

New Gallup data from this week show that only 8% of US employees use AI daily.

In my last post, “The AI Implementation Sandwich”, I shared that scalable, sustainable AI adoption depends on three layers: clear executive vision, empowered team-level experimentation, and connective “AI Lab” tissue in the middle.

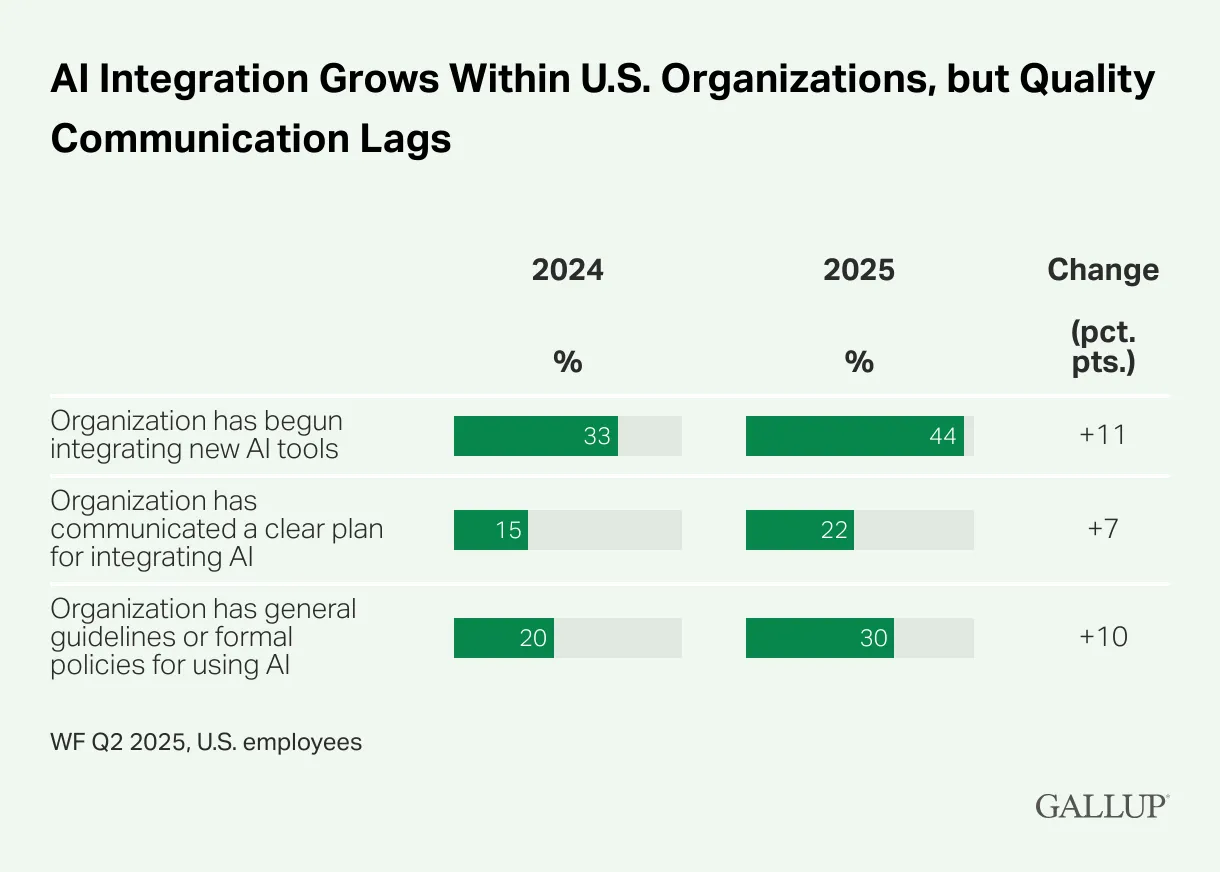

Well, a clear AI vision from the top is lacking in almost 80% of companies.

No vision, no adoption. Where is it going wrong?

During the 9th Lead with AI Executive Bootcamp last week, I hosted several candid, sometimes vulnerable conversations with leaders at the forefront of this transformation. The conclusion? One ingredient underpins AI success: trust.

Usually, we keep these conversations behind closed doors, but with the participants' approval, I’m excited to share some of the key insights.

AI Adoption Is a Cultural Issue

While AI platforms, tools, and models get the headlines, culture (and specifically trust) dominates the day-to-day reality of AI transformation.

As Stacy Proctor, a seasoned CHRO, put it:

“Trust is the foundation of everything. Do we trust employees? Do employees trust leaders? Does anyone trust AI? Trust is always the foundation of everything. And so when you’re talking with your CPOs, your CHROs—as one myself—I think it’s important that we always have that as part of the conversation. What are we doing to build trust in our organizations?”

AI makes trust more essential than ever.

This is especially true with layoffs looming or already happening (not due to AI, but in the context of an AI-enabled future of work). As Shlomit Gruman-Navot shared from her HR practice:

“There is a lack of trust by employees, because they’re saying, ‘Oh, you’re just gonna use it in order to reduce workforce. Let’s be real. This is all about reducing headcount.’ It’s a valid point, because we are transforming the work. And if AI reveals that some tasks are no longer needed, that’s okay, but it doesn’t mean that you can’t do it also in the most human-centric way possible.”

But this is not new. As Dean Stanberry reminded us:

“I go back to the 1980s, when we started shifting away from companies that had lifetime employment and humans became disposable. Do we have trust today in corporations? If you believe that they can dismiss you at a moment’s notice for no particular reason, and disrupt your entire life—basically, how do you trust an organization in the current environment?”

AI Adoption and the Culture of Psychological Safety

True AI-first organizations foster psychological safety: the ability to experiment, ask “stupid” questions, and even fail without fear of judgment or reprisal. This is where many companies stumble.

Alison Curtis, a leadership trainer, sees this play out daily:

“AI creates psychological safety for us as humans to experiment with our thinking. And as humans, we haven’t quite got that right. So one of the biggest hindrances, I think, to workplace efficiency is fear, and the fact that people don’t come forward with their best thinking for fear of judgment or not being accepted.”

I see this even in many AI workshops and client projects: people use AI “in secret,” fearing that if they admit it, “I’m going to get more work, or be seen as someone who’s slacking or taking shortcuts.”

The culture has to change to one where ChatGPT is a compliment, not an insult. People who use it should be celebrated and feel excited to share their successes and challenges.