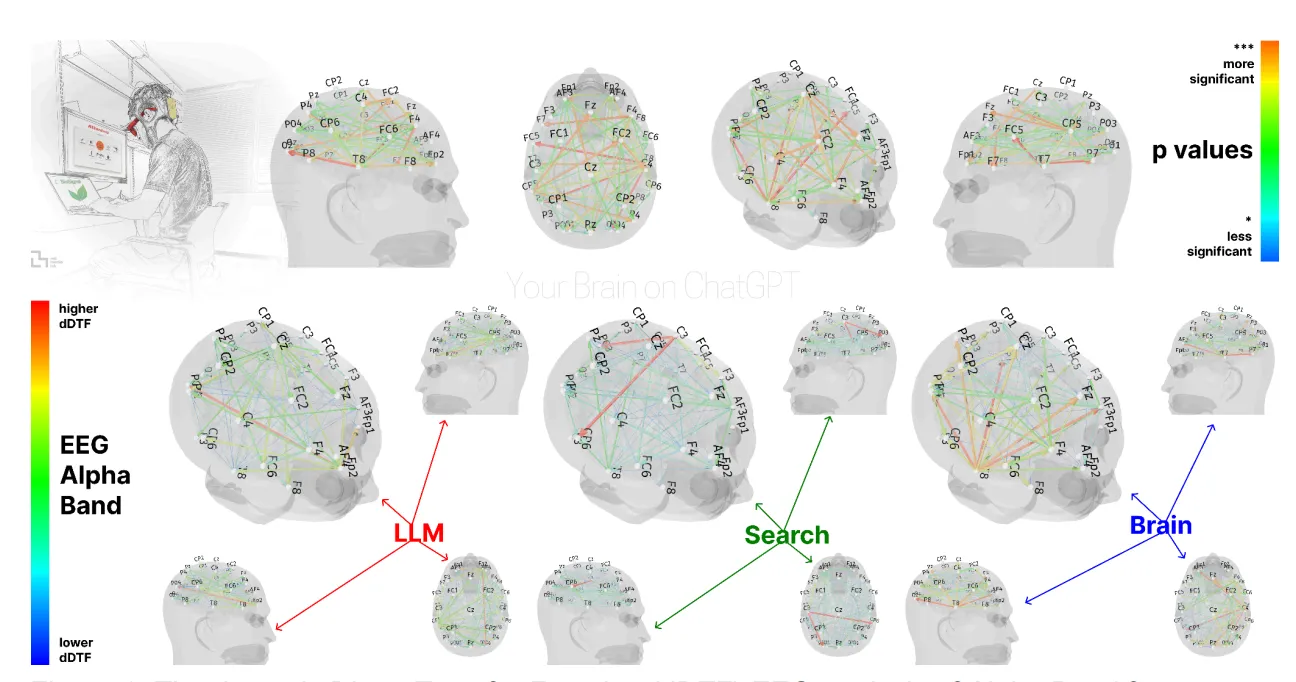

A new MIT Media Lab report, “Your Brain on ChatGPT,” found that participants who wrote essays with ChatGPT not only recalled less of what they had written but also showed less executive activity during the task.

In other words, they were letting the machine ‘take over.’

Within days, the media picked up on the study with headlines along the lines of “AI Will Make You Stupid,” and the LinkedIn thinkfluencers were off to the races.

But the real story, buried beneath the clickbait, is more complex, and far more actionable for leaders: How do we intentionally collaborate with AI, rather than unconsciously offloading our most valuable skills?

Let’s dive in and break down what the MIT experiment actually found, and how to avoid the “cognitive debt” trap.

What the experiment actually showed

Let’s get the facts straight first.

Fifty-four adults aged 18 to 39 from the Boston area wrote three timed, SAT-style essays while wearing 32-channel EEG caps. (Sadly, no photos were shared.)

Participants were split into three groups: one relied on their own brains alone, one used Google Search (explicitly excluding “AI Overviews”), and one used ChatGPT (GPT-4o).

The AI group produced decent essays the fastest, but it also showed the weakest connectivity between brain regions in the alpha and beta frequency bands, a neural signature of what's called “executive engagement”: higher-level cognitive functions like attention, working memory, and decision-making.

In plain English: the less those brain regions “talked” to each other, the more of the heavy lifting was being done by the AI rather than by the writer’s own mind.

These AI-fueled essayists also produced the “most formulaic language” and showed the poorest recall of their own text. In other words: forgettable “AI slop.”

Four months later, the gap had widened, leading the authors to warn of a “cognitive-debt spiral.”

But for full context:

- This was a small, homogeneous sample. Fifty-four educated Bostonians can't speak for everyone, whether students, senior specialists, or multilingual and multicultural teams. And only 18 people returned for the final session, on which the conclusions are based.

- The researchers measured the writing task as a whole. In the authors’ words: “[We] did not divide our essay writing task into subtasks like idea generation, writing, and so on, which is often done in prior work.”

- The study hasn’t been peer-reviewed yet. It was released as a preprint, and peer review may call for a larger sample and an expanded set of tests.

Now, none of these caveats erases the fact that under-engagement was observed.

Especially since these findings echo earlier research:

- A Microsoft–Carnegie Mellon survey of 319 knowledge workers found that higher confidence in AI answers was associated with lower effort in critical thinking, while higher self-confidence was associated with the opposite.

- A December 2024 laboratory trial involving 117 university students warned of “metacognitive laziness”: learners who relied on ChatGPT to revise essays spent less time planning or monitoring their work and retained less of the material.

And let’s not forget that this isn’t unique to AI.

Research on navigation points the same way: frequent GPS users have shown reduced hippocampal activity and weaker spatial memory, whereas London taxi drivers, who build mental maps of the city, have been found to have enlarged hippocampi.

Different technology, same principle: passive use erodes skill; active use sharpens it.

And this is exactly the point.