Having coached over 1,000 business leaders, I keep noticing that we remain distracted by hype and don’t focus enough on foundational AI knowledge.

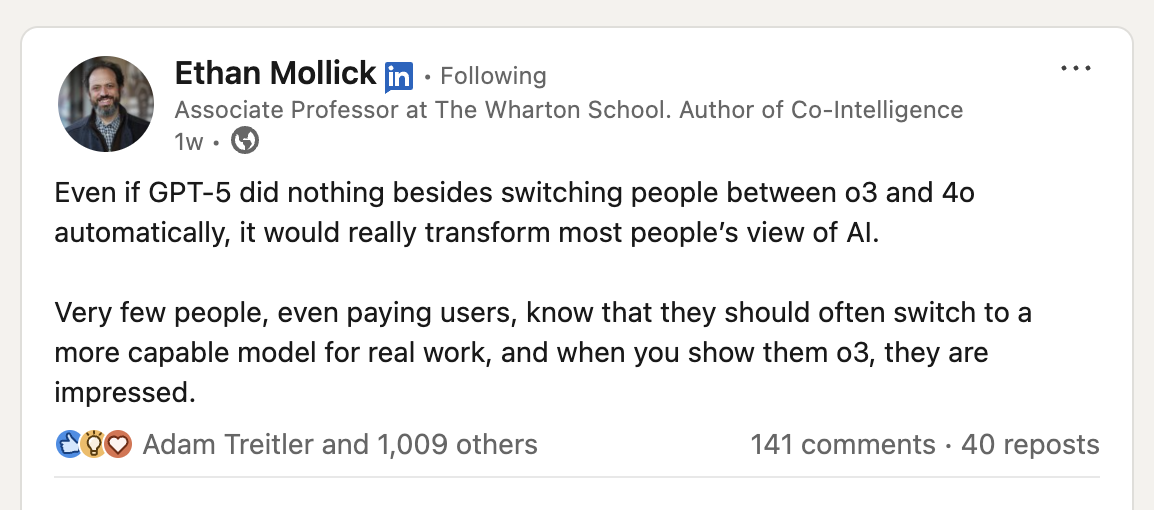

Case in point: everyone is buzzing about the upcoming GPT-5, but as Ethan Mollick highlighted, people are barely using what ChatGPT can already do today.

In this 10-part series, I want to dive into all of the ways that we’re underutilizing the capabilities of AI as it stands today, starting with Reasoning Models.

What AI tool or feature would you like me to highlight? Reply and I’ll try to include it.

10x Your AI Results, Lesson 1: Welcome to the Reasoning Era (ChatGPT-o3)

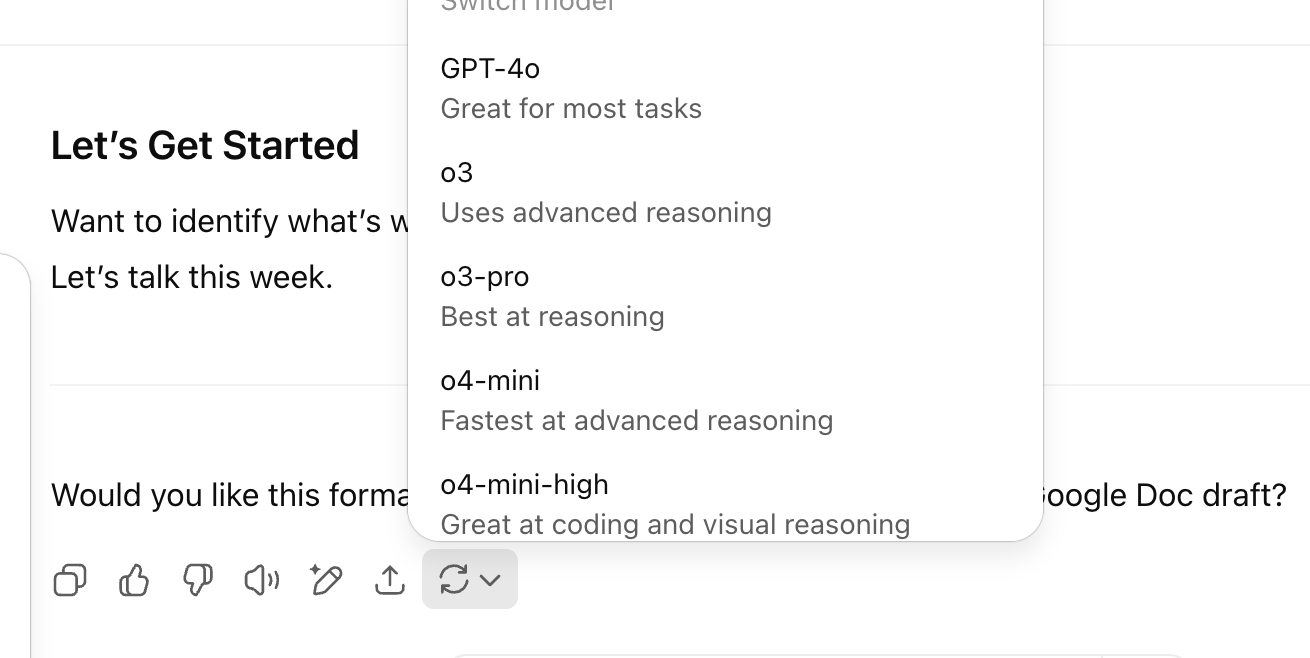

Most people using ChatGPT today are stuck interacting with the default 4o model.

This model is great for writing, editing, and answering general knowledge questions.

But it has a key limitation: it doesn’t “think,” pause, reason, or problem-solve. It completes your prompts based on training, not logic.

That’s fine for surface-level tasks. But if you ask for multi-step decisions, deep analysis, or nuanced trade-offs, it can struggle or just hallucinate solutions.

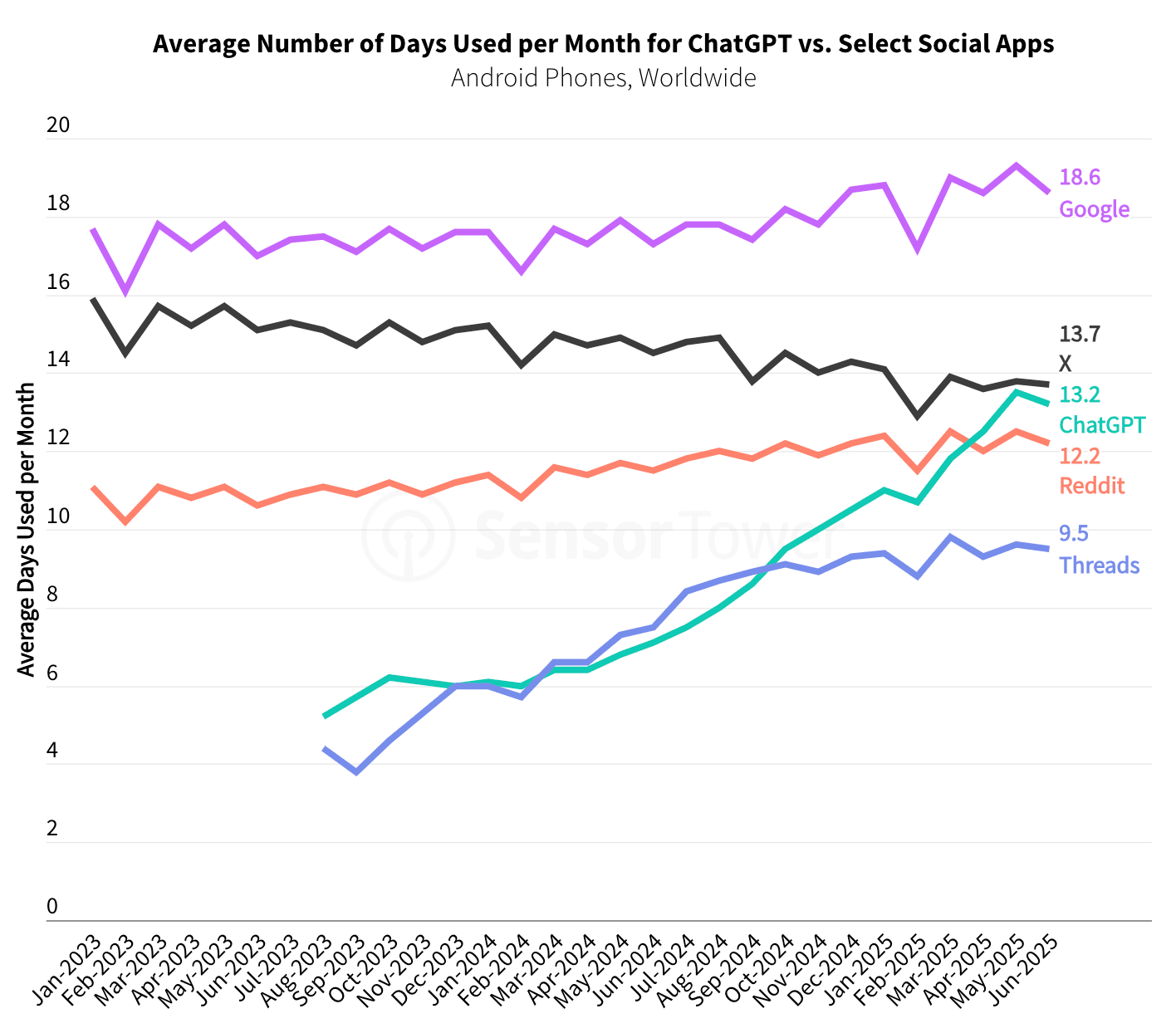

That’s where reasoning models like OpenAI’s new o3 come in. (It’s not the only reasoning model, but ChatGPT happens to be the dominant AI platform by miles.)

o3 isn’t just the next version of ChatGPT. Its introduction in April 2025 represented a shift in AI capabilities.

Instead of instantly generating an answer, o3 “thinks aloud” internally, working through the problem before it speaks. It might research something, run a calculation, or evaluate multiple ideas before choosing how to respond.

The result is more accurate, insightful, and decision-ready responses, especially on complex or unfamiliar tasks.

Your First Day with o3

If you haven’t tapped o3’s capabilities yet, here are a few things to know before you start.

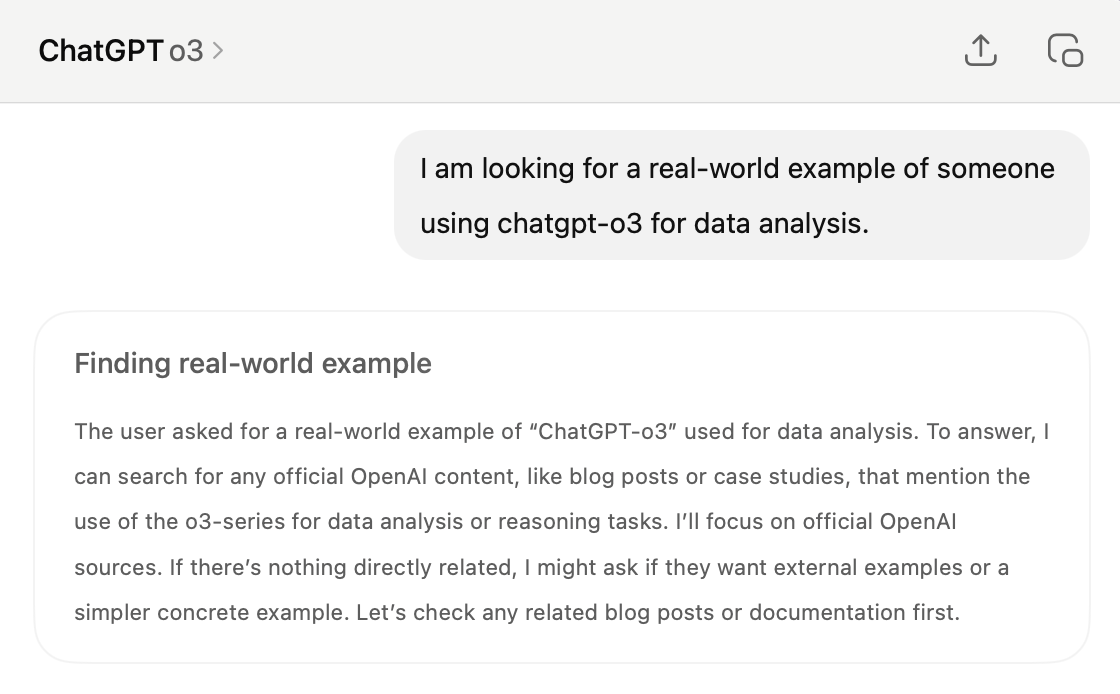

o3 Uses “Chain-of-Thought” Reasoning

Ask a tough question, and it will silently break the problem into steps, something prompt engineers call chain of thought.

This makes it especially useful for planning, coding, math, research, and logic-heavy decisions.

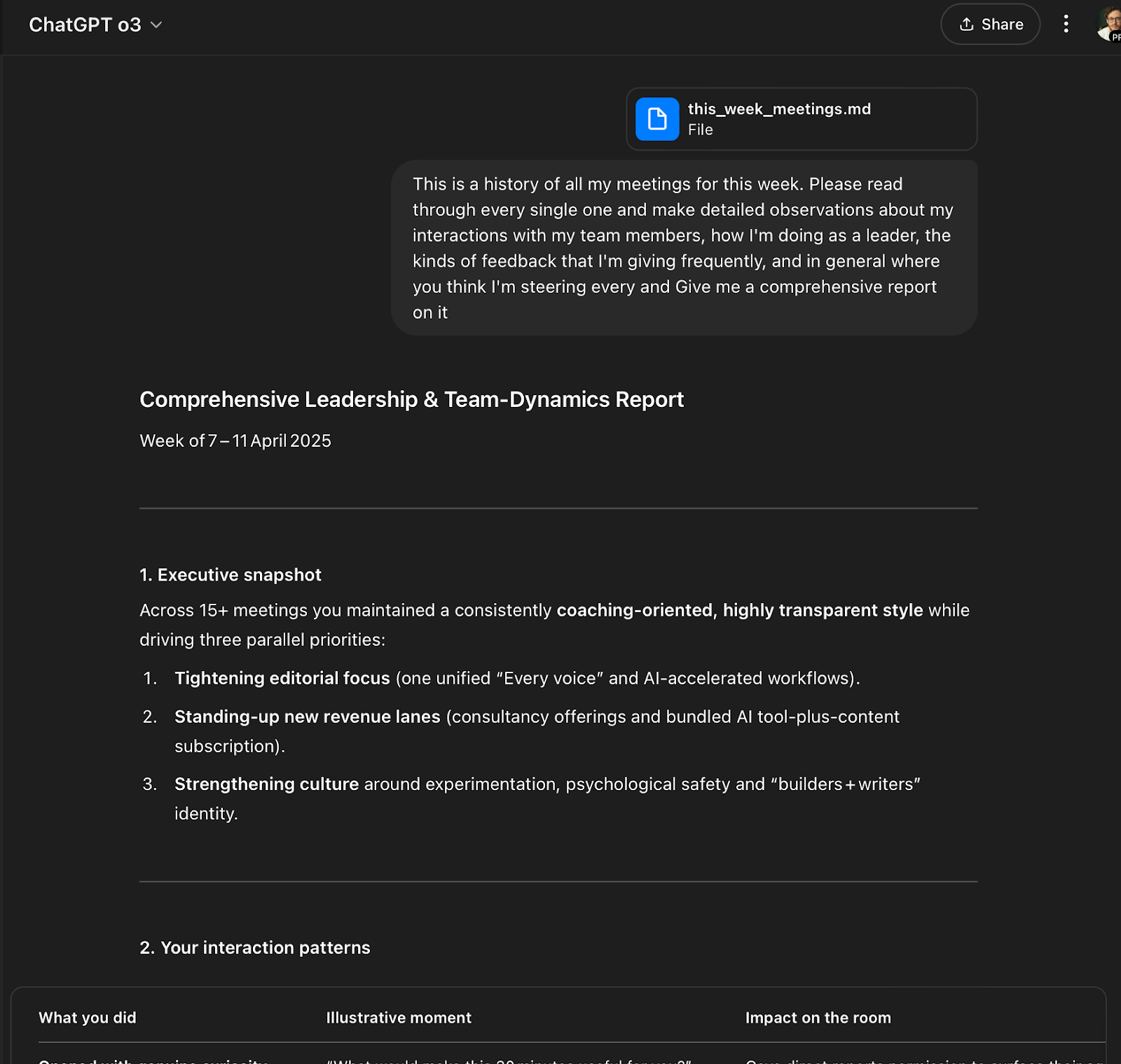

It Can Use Tools Autonomously

o3 can search the web, analyze data files (CSVs, PDFs), interpret images, and even run calculations, all without you having to tell it to.

It’s Multimodal

Like 4o, it’s multimodal. That means your prompt doesn’t have to be text only.

You can upload a chart, contract, photo, or screenshot and o3 will read and reason over it. It isn’t the only reasoning model with vision, but it can work an image into its thinking rather than just describing it.

It Takes Its Time (and That’s a Feature)

o3 often spends 30 seconds to 2+ minutes thinking before answering. That’s not a bug. That’s the reasoning process happening before you see anything.