Earlier today, when I delivered a workshop on “What’s Best, and What’s Next for AI” to over 50 senior leaders from Nordic companies, the topic of AI implementation came up.

How is it that company-wide AI transformation is so hard to pull off?

A report from MIT’s Media Lab NANDA initiative, titled “The GenAI Divide: State of AI in Business 2025,” gives us a few valuable insights.

Drawing on data from 300 publicly disclosed AI initiatives, 150 structured interviews with leaders, and a survey of 350 employees, the paper made headlines with its central finding that 95% of enterprise generative-AI pilots deliver no measurable ROI.

But this is not a story about how the technology is flawed. It’s a story about organizational and operational integration issues, like broken workflows, missing feedback loops, and poor alignment with business needs.

MIT Paper: Why Most Projects Falter

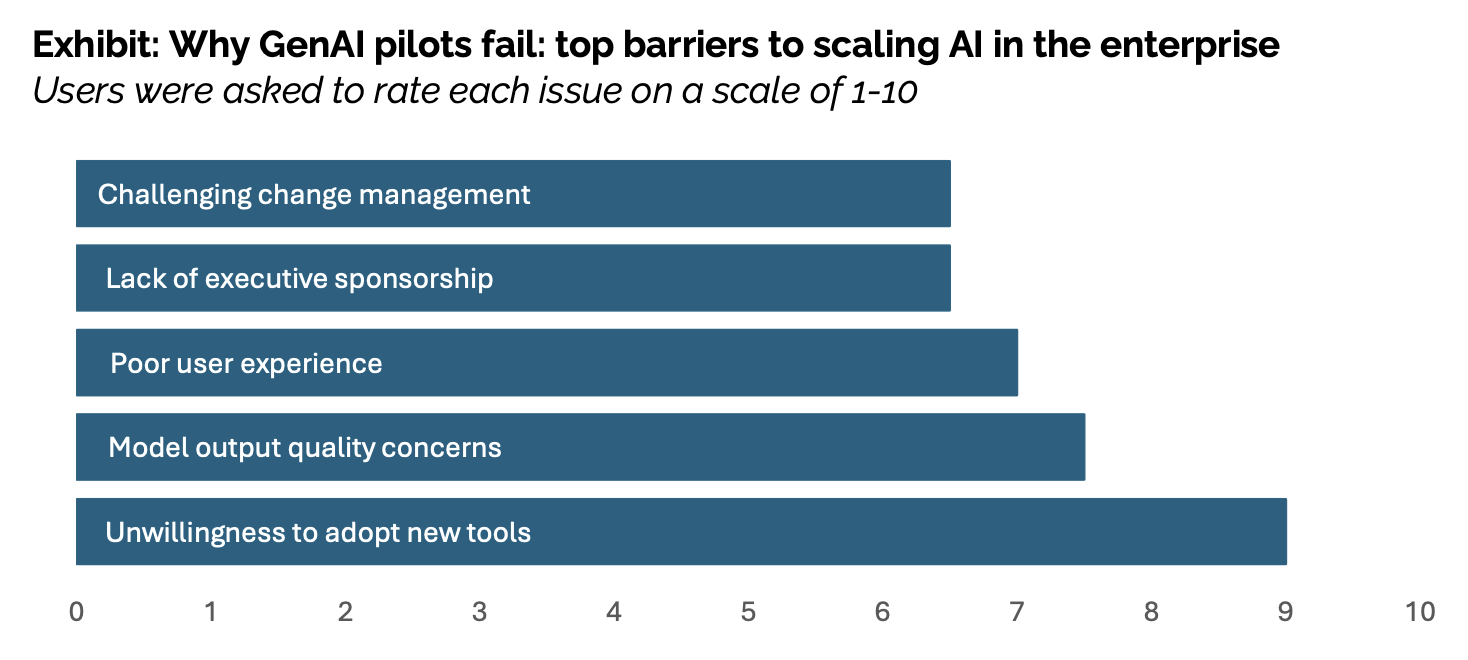

So why do so many AI implementations fail? The paper found 5 key reasons:

1. The “Learning Gap”

Most deployments use generic tools like ChatGPT that don’t adapt or learn from context, so they don’t integrate into workflows or retain business- or role-specific knowledge.

Many of us expected AI to become smarter over time, but quickly learned that memory, if any, was very limited.

This is what the paper calls “the primary factor” that keeps organizations from winning with AI.

And this is in large part because ‘consumer AI’ is so stellar in its experience and quality of outputs that enterprise-sanctioned tools disappoint right away.

2. Build vs. Buy

Building internal AI solutions shows a much lower success rate (~33%), while purchasing from specialized vendors or partnerships succeeds ~67% of the time.

This is a striking gap, and it highlights how crucial the user experience is to real AI impact.

We have seen remarkable success stories of in-house tools, such as McKinsey’s Lilli, but the truth is that, although data privacy and security matter, not everyone can pull off a successful AI platform.

3. Budget Misalignment

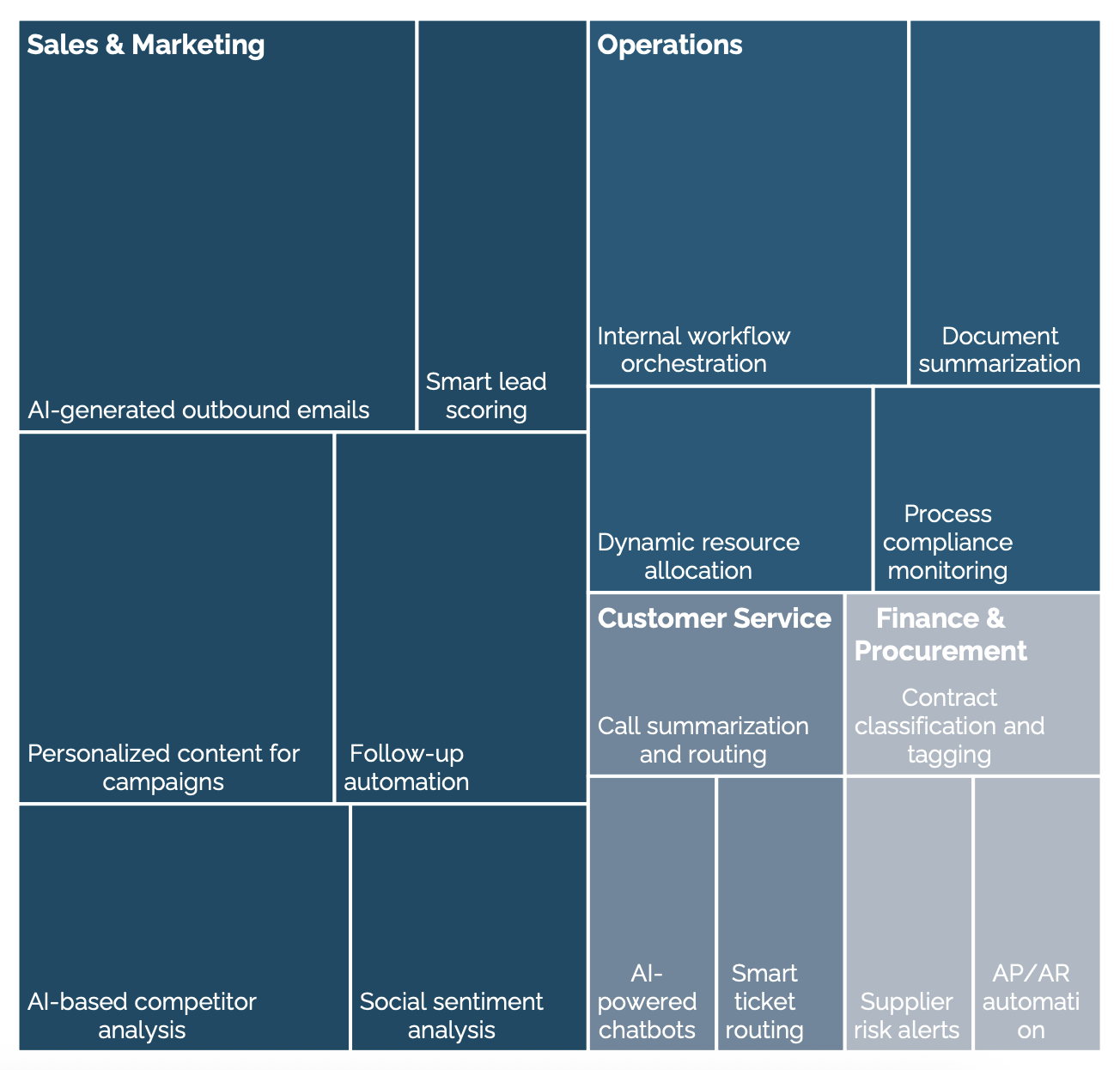

Over 70% of generative AI budgets flow into sales and marketing tools, with low ROI. Higher-impact areas, like back-office automation, logistics, and fraud detection, are underfunded.

I know, it’s tempting to buy into shiny tools, but this is a good reminder that AI excels at rote tasks in unsexy workflows, even though the impact there is not always easy to measure.

A VP of Procurement commented on the challenge of where to place AI bets: "If I buy a tool to help my team work faster, how do I quantify that impact? How do I justify it to my CEO when it won't directly move revenue or decrease measurable costs? I could argue it helps our scientists get their tools faster, but that's several degrees removed from bottom-line impact."